While ReLU drops abruptly to zero for negative inputs, GELU is much smoother in this region. Like ReLU, GELU has no upper bound and is bounded below. Despite being introduced earlier than ReLU, its popularity in the DL literature came after ReLU's, owing to characteristics that compensate for ReLU's drawbacks.
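The contrast between the two functions for negative inputs can be checked numerically. The sketch below is only an illustration: it assumes the exact GELU definition $x \, \Phi(x)$, with $\Phi$ the standard normal CDF, which is not spelled out in the text above, and the helper names `relu` and `gelu` are chosen here for convenience.

```python
import math


def relu(x: float) -> float:
    """ReLU: exactly zero for every negative input."""
    return max(0.0, x)


def gelu(x: float) -> float:
    """GELU, assumed here to be x * Phi(x), Phi being the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


if __name__ == "__main__":
    # Compare both functions around zero and further out.
    for x in (-3.0, -1.0, -0.1, 0.0, 0.1, 1.0, 3.0):
        print(f"x={x:+.1f}  relu={relu(x):.4f}  gelu={gelu(x):.4f}")
```

For negative inputs ReLU returns exactly zero, whereas GELU dips slightly below zero and returns smoothly toward it, which is the sense in which it is bounded below but not flat.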

#SWISH ACTIVATION SERIES#

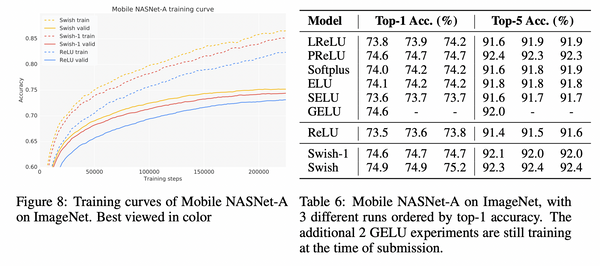

The authors of the Swish paper state that "Our experiments show that the best discovered activation function [...] tends to work better than ReLU on deeper models across a number of challenging datasets."

Any change of activation function in any one layer will, except in astronomically rare cases, affect accuracy, reliability, and computational efficiency. Whether the change is significant cannot be generalized. That is why new ideas are tested against the data sets traditionally used to gauge usefulness. Whether these traditions create a bias is another question. Those who follow the theory of use case analysis pioneered by Swedish computer scientist Ivar Hjalmar Jacobson, or Six Sigma ideas, would say that these tests are unit tests, not functional tests against real-world use cases, and they have a point.

Combining activation functions to form new activation functions is not common. For instance, AlexNet does not combine them. It is, however, very common to use different activation functions in different layers of a single, effective network design.

To correct any misconceptions that may arise from another answer: AlexNet, the name given to the approach outlined in ImageNet Classification with Deep Convolutional Neural Networks (2012) by Alex Krizhevsky, Ilya Sutskever, and Geoffrey E. Hinton from the University of Toronto, does not involve combining activation functions to form new ones. According to the paper, the output of the last fully-connected layer is fed to a 1000-way softmax which produces a distribution over the 1000 class labels, and the ReLU non-linearity is applied to the output of every convolutional and fully-connected layer. In other words, the internal layers are pure ReLU and the output layer is softmax. The series of layers also includes convolution kernels and pooling layers, and the design has entered common use since the authors won the ImageNet competition in 2012. Other approaches have won subsequent competitions.
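The pattern of using different activation functions in different layers, as AlexNet does with ReLU internally and softmax at the output, can be sketched in a few lines. This is a toy two-layer network, not AlexNet: the weights `W1`, `b1`, `W2`, `b2` and the helper names are hypothetical values made up for illustration.

```python
import math


def relu(v):
    """Element-wise ReLU, used for the hidden layer."""
    return [max(0.0, x) for x in v]


def softmax(v):
    """Softmax over a vector, used for the output layer (shifted for stability)."""
    m = max(v)
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]


def dense(v, weights, bias):
    """Fully-connected layer: one weight row and one bias per output unit."""
    return [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(weights, bias)]


# Hypothetical parameters: 2 inputs -> 3 hidden units -> 2 classes.
W1 = [[0.5, -1.0], [1.5, 0.25], [-0.75, 0.5]]
b1 = [0.0, 0.1, -0.2]
W2 = [[1.0, -0.5, 0.25], [-1.0, 0.5, 0.75]]
b2 = [0.0, 0.0]


def forward(x):
    """ReLU in the hidden layer, softmax at the output; neither function is combined."""
    hidden = relu(dense(x, W1, b1))
    return softmax(dense(hidden, W2, b2))


if __name__ == "__main__":
    print(forward([1.0, 2.0]))
```

Each layer applies exactly one standard activation. The two functions are used side by side in the same network, but no new activation function is formed from them.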
The general answer to the behavior of combining common activation functions is that the laws of calculus, specifically differential calculus, must be applied; the results must be obtained through experiment to be sure of the qualities of the assembled function; and the additional complexity is likely to increase computation time. The exception to such an increase is when the computational burden of the combination is small compared to the convergence advantages the combination provides.

This appears to be true of Swish, the name given to the activation function defined as

$$f(x) = x \, \mathbb{S}(\beta x)$$

where $\mathbb{S}$ is the sigmoid function. Note that Swish is not strictly a combination of activation functions. It is formed by adding a hyper-parameter $\beta$ inside the sigmoid function and multiplying the input by the sigmoid function's result.

It does not appear to be developed by Google. The paper, Searching for Activation Functions, originally submitted anonymously (for double-blind review as an ICLR 2018 paper), was authored by Prajit Ramachandran, Barret Zoph, and Quoc V. Le.
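As a rough illustration of the Swish definition and of applying differential calculus to an assembled function, the sketch below implements $f(x) = x \, \mathbb{S}(\beta x)$ together with its derivative $f'(x) = \mathbb{S}(\beta x) + \beta x \, \mathbb{S}(\beta x)\,(1 - \mathbb{S}(\beta x))$, obtained from the product rule. The function names and the finite-difference check are assumptions added for illustration and are not taken from the paper.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def swish(x: float, beta: float = 1.0) -> float:
    """Swish: the input multiplied by sigmoid(beta * input)."""
    return x * sigmoid(beta * x)


def swish_grad(x: float, beta: float = 1.0) -> float:
    """Analytic derivative from the product rule and sigmoid' = s * (1 - s)."""
    s = sigmoid(beta * x)
    return s + beta * x * s * (1.0 - s)


def numeric_grad(f, x: float, h: float = 1e-6) -> float:
    """Central finite difference, used only to sanity-check the analytic form."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


if __name__ == "__main__":
    beta = 1.5  # arbitrary value of the hyper-parameter, chosen for the demo
    for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
        analytic = swish_grad(x, beta)
        numeric = numeric_grad(lambda t: swish(t, beta), x)
        print(f"x={x:+.1f}  swish={swish(x, beta):+.5f}  "
              f"grad={analytic:+.5f}  numeric={numeric:+.5f}")
```

The extra cost over ReLU is one sigmoid evaluation per input, which is the kind of small computational burden the text weighs against possible convergence advantages. Whether that trade is worthwhile still has to be settled by experiment.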
